RFM Framework: The marketing Swiss-knife

Why is RFM the marketing Swiss-knife?

Unlike predictive models built for a single marketing objective (e.g. churn, upsell), which are more expensive in resources and compute, the good old RFM framework has proven over several decades that it can serve several marketing objectives simultaneously, namely:

- Identifying customers who are churning or moving to the competition.

- Optimizing marketing campaign costs (right offer to the right segment).

- Understanding the low-hanging fruit (profitable segments).

- Establishing the Pareto phenomenon (80-20 rule).

- Running remarketing and retargeting campaigns.

- Increasing loyalty and user engagement.

- Identifying early adopters for new product launches.

- Improving customer value over the lifetime.

- Mapping the life cycle / maturity of customer segments.

- Identifying / ring-fencing the most valuable customer segment.

With so many actionable insights coming out of one framework and one effort, it makes a lot of sense for any digital business (e-commerce, fintech, marketplace, etc.) to develop, maintain and monitor RFM regularly. In my experience, RFM can be a fantastic starting point for data-driven marketing and CLVM initiatives.

Prologue : Two facets of Customer management

Three choices: spend to acquire new customers (7X more expensive), retain existing customers through focused customer value management (at X cost), or balance these two facets.

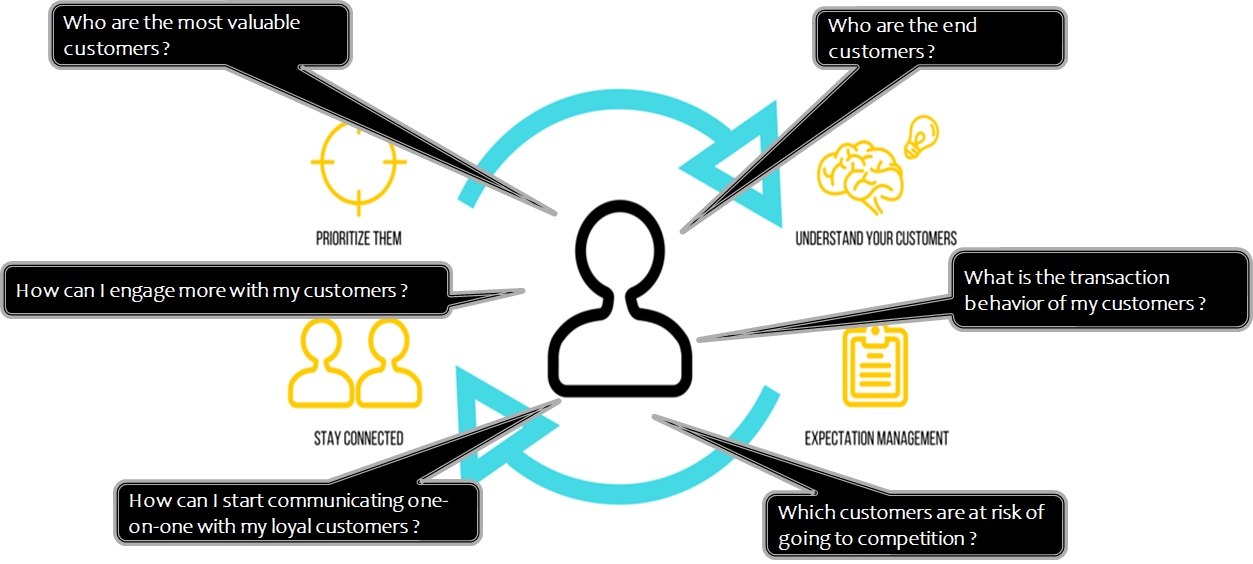

Business Intent : What do we want to know about our customers

Who are our customers, and what can we do to keep them engaged?

Basics of RFMC

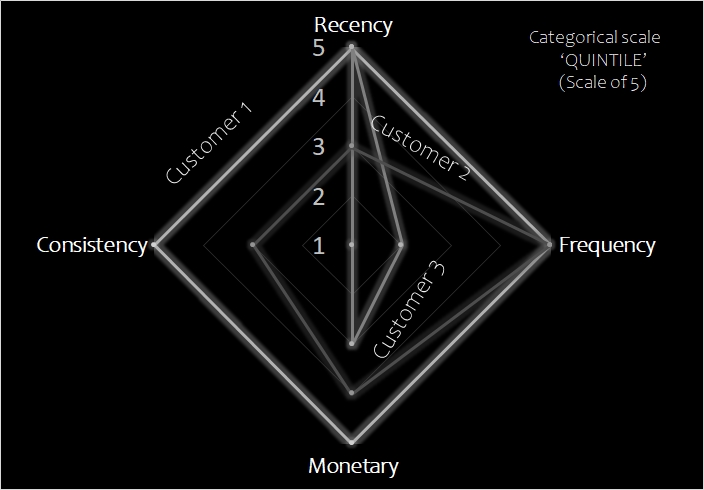

The goal of RFMC Analysis is to segment customers based on transaction behavior. To do this, we need to understand the historical actions of individual customers for each RFMC factor. We then rank customers based on each individual RFMC factor, and finally pull all the factors together to create RFMC segments for targeted marketing.
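The ranking and combining steps described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the customer IDs, the metric values, the choice of five bins and the helper names `quantile_scores` and `rfmc_segments` are all assumptions made for the example.

```python
# Hypothetical per-customer RFMC values (recency in days; lower recency is better).
metrics = {
    "c1": {"recency": 3, "frequency": 12, "monetary": 900.0, "consistency": 10},
    "c2": {"recency": 40, "frequency": 4, "monetary": 250.0, "consistency": 4},
    "c3": {"recency": 200, "frequency": 1, "monetary": 20.0, "consistency": 1},
    "c4": {"recency": 15, "frequency": 7, "monetary": 1200.0, "consistency": 6},
    "c5": {"recency": 90, "frequency": 2, "monetary": 75.0, "consistency": 2},
}

FACTORS = ("recency", "frequency", "monetary", "consistency")


def quantile_scores(values, bins=5, higher_is_better=True):
    """Rank customers on one factor and map the rank to a score in 1..bins.

    The worst customer gets 1 and the best gets `bins`. Ties are broken by
    input order, which is a simplification.
    """
    ordered = sorted(values, key=values.get, reverse=not higher_is_better)
    n = len(ordered)
    return {customer: (i * bins) // n + 1 for i, customer in enumerate(ordered)}


def rfmc_segments(metrics, bins=5):
    """Combine the four per-factor scores into one code such as '5545'."""
    scores = {}
    for factor in FACTORS:
        values = {c: m[factor] for c, m in metrics.items()}
        # Recency is the only factor where a smaller value is better.
        scores[factor] = quantile_scores(values, bins, higher_is_better=(factor != "recency"))
    return {c: "".join(str(scores[f][c]) for f in FACTORS) for c in metrics}


segments = rfmc_segments(metrics)
print(segments)
```

Each digit of the resulting code is the customer's score on one factor, in R, F, M, C order, so customers sharing a code fall into the same micro-segment.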

Recency : How recently a customer transacted. (measure : time (day) since last activity)

Frequency: How many times a customer transacted. (measure : count of distinct transactions)

Monetary: Value (in money sense) of transaction. (measure : sum of transaction value)

Consistency: The regularity of transactions. (measure : number of distinct days (or weeks) with a transaction)
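The four measures above can be derived from a raw transaction log in a single pass. The sketch below assumes a hypothetical log of `(customer_id, transaction_id, date, amount)` tuples and a hypothetical helper `rfmc_metrics`; consistency is counted in distinct days here, though weeks would work the same way.

```python
from collections import defaultdict
from datetime import date

# Hypothetical transaction log: (customer_id, transaction_id, date, amount).
transactions = [
    ("c1", "t1", date(2024, 1, 5), 120.0),
    ("c1", "t2", date(2024, 1, 5), 30.0),
    ("c1", "t3", date(2024, 3, 20), 60.0),
    ("c2", "t4", date(2024, 2, 10), 500.0),
    ("c3", "t5", date(2023, 11, 1), 15.0),
    ("c3", "t6", date(2023, 12, 1), 25.0),
]


def rfmc_metrics(rows, as_of):
    """Return {customer_id: {recency, frequency, monetary, consistency}}."""
    last_seen = {}
    txn_ids = defaultdict(set)
    spend = defaultdict(float)
    active_days = defaultdict(set)
    for customer, txn_id, day, amount in rows:
        last_seen[customer] = max(last_seen.get(customer, day), day)
        txn_ids[customer].add(txn_id)
        spend[customer] += amount
        active_days[customer].add(day)
    return {
        c: {
            "recency": (as_of - last_seen[c]).days,  # days since last activity
            "frequency": len(txn_ids[c]),            # count of distinct transactions
            "monetary": spend[c],                    # sum of transaction value
            "consistency": len(active_days[c]),      # distinct days with a transaction
        }
        for c in last_seen
    }


metrics = rfmc_metrics(transactions, as_of=date(2024, 4, 1))
for customer, values in metrics.items():
    print(customer, values)
```

Note that customer `c1` has three transactions but only two active days, which is exactly the difference between frequency and consistency.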

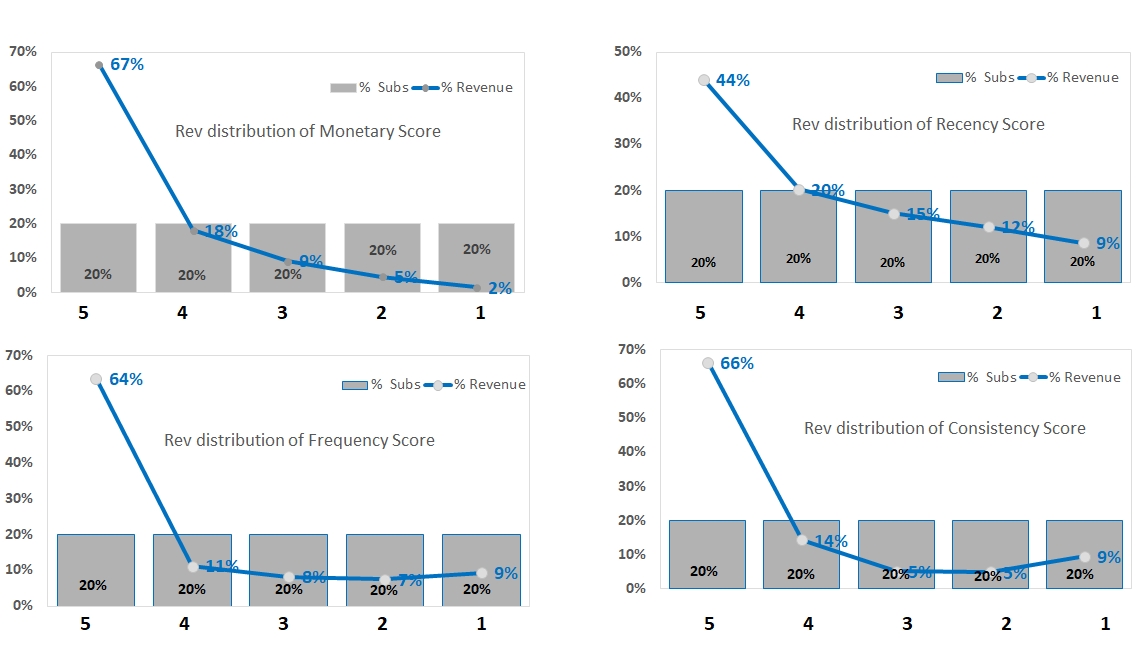

RFM Summary (Indicative Pattern)

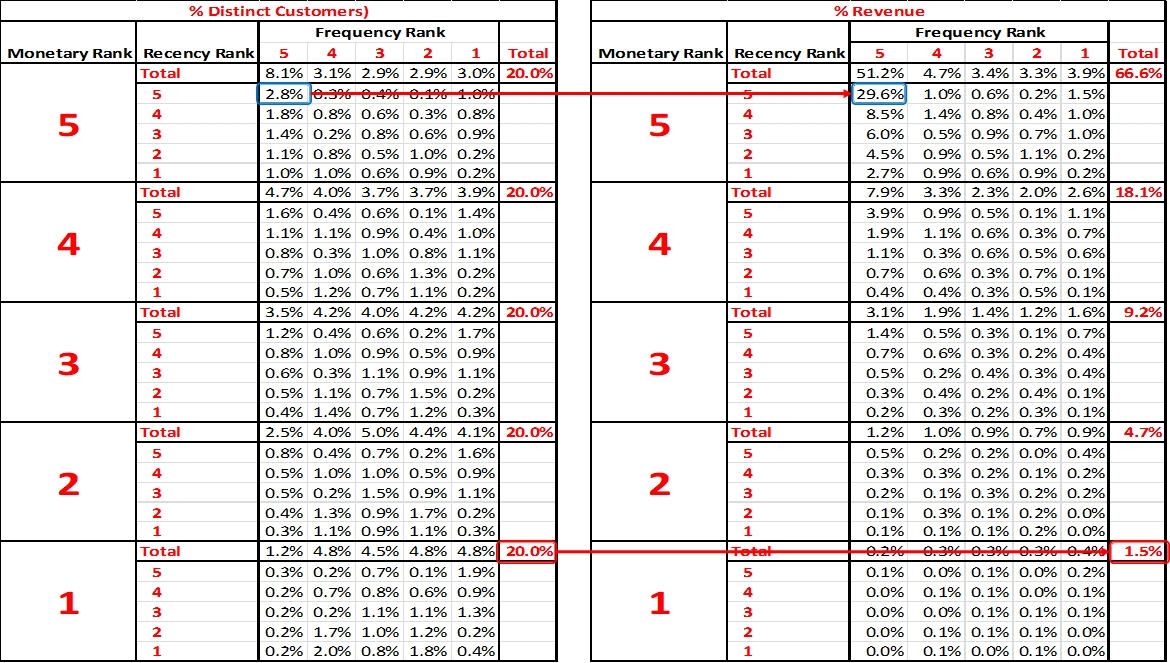

The top 20% of customers contribute 66.6% of revenue, while the bottom 20% contribute only 1.5%. Clearly, not all customers can be treated the same way.
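A revenue concentration check of this kind takes only a few lines. The sketch below sorts customers by spend, splits them into five equal-sized groups and reports each group's share of revenue. The spend figures are made up for illustration and are not the data behind the figure above; `revenue_share_by_bin` is a hypothetical helper name.

```python
# Hypothetical total spend per customer (illustrative values only).
monetary = {
    "c01": 500.0, "c02": 300.0, "c03": 80.0, "c04": 50.0, "c05": 30.0,
    "c06": 20.0, "c07": 10.0, "c08": 5.0, "c09": 3.0, "c10": 2.0,
}


def revenue_share_by_bin(monetary, bins=5):
    """Return each bin's share of revenue, from top spenders to bottom."""
    ordered = sorted(monetary.values(), reverse=True)
    n = len(ordered)
    bin_totals = [0.0] * bins
    for i, value in enumerate(ordered):
        bin_totals[(i * bins) // n] += value
    total = sum(ordered)
    return [bin_total / total for bin_total in bin_totals]


shares = revenue_share_by_bin(monetary)
for rank, share in enumerate(shares, start=1):
    print(f"quintile {rank}: {share:.1%} of revenue")
```

If the first share is far above 20% and the last far below it, the Pareto pattern holds for the customer base being analysed.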

Commercial indicators from RFMC model (2 Dimensional)

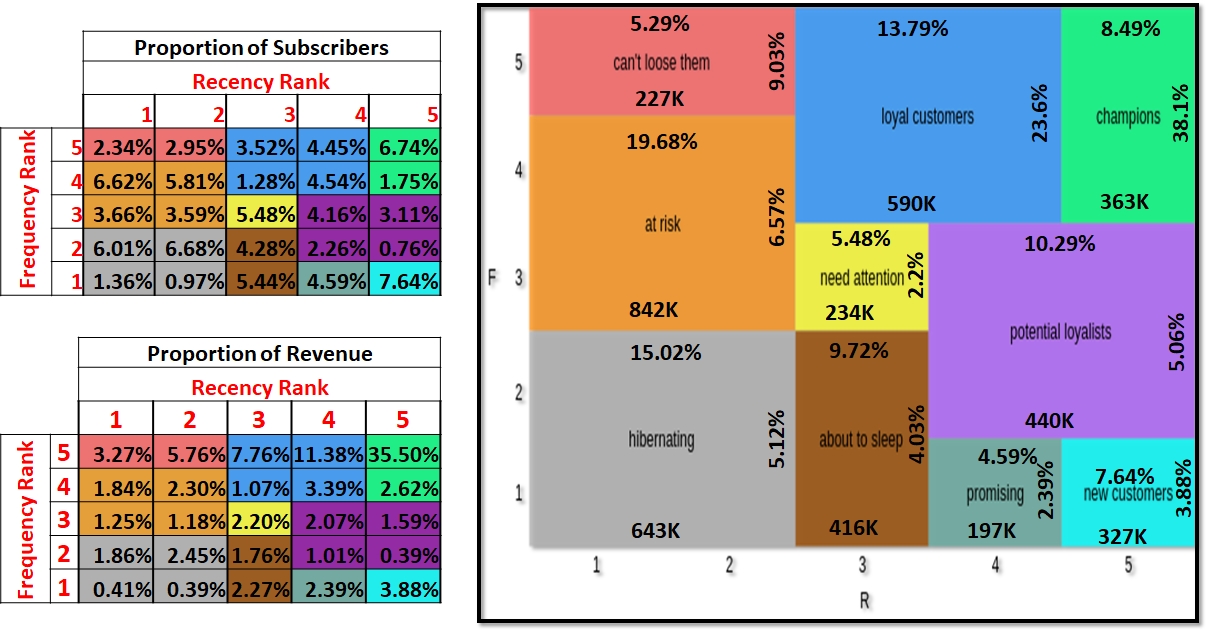

Ten clear segments of customer behavior emerge from just two dimensions (Frequency and Recency). Using all four dimensions results in many micro-segments.
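A two-dimensional grid like this can be expressed as a lookup from (Recency score, Frequency score) to a segment name. In the sketch below, the segment names and cell boundaries follow a convention often seen in RFM write-ups and are an assumption here; the grid in the figure above may use different labels or cut-offs. Scores are assumed to run from 1 (worst) to 5 (best).

```python
import re

# Assumed mapping of "<R score><F score>" patterns to segment names.
# These names and boundaries are illustrative, not taken from the article's figure.
SEGMENT_RULES = [
    (r"[1-2][1-2]", "Hibernating"),
    (r"[1-2][3-4]", "At Risk"),
    (r"[1-2]5", "Can't Lose Them"),
    (r"3[1-2]", "About to Sleep"),
    (r"33", "Need Attention"),
    (r"[3-4][4-5]", "Loyal Customers"),
    (r"41", "Promising"),
    (r"51", "New Customers"),
    (r"[4-5][2-3]", "Potential Loyalists"),
    (r"5[4-5]", "Champions"),
]


def rf_segment(r_score, f_score):
    """Return the segment name for a pair of 1..5 Recency and Frequency scores."""
    code = f"{r_score}{f_score}"
    for pattern, name in SEGMENT_RULES:
        if re.fullmatch(pattern, code):
            return name
    raise ValueError(f"scores out of range: R={r_score}, F={f_score}")


# Print the full 5x5 grid, one row per Recency score.
for r in range(5, 0, -1):
    print(r, [rf_segment(r, f) for f in range(1, 6)])
```

Keeping the rules in a table rather than in nested `if` statements makes it easy for marketing teams to adjust boundaries without touching the lookup logic.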

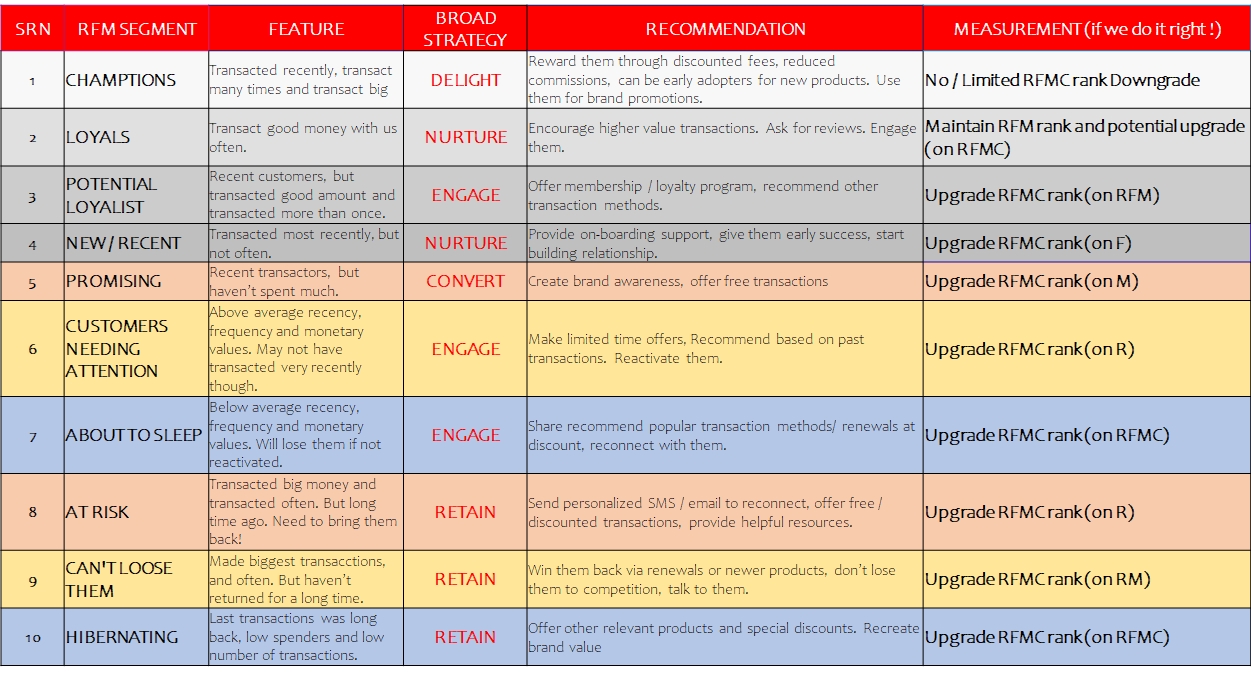

Recommended Actions - RFMC

Conclusion

While RFM is relatively simple to develop, its output appeals immediately to many data-savvy marketing professionals. In every digital or customer-facing organization, RFM does get executed, either as separate components (recency-based campaigns, for example) or as a single framework.

Additionally, digital / mobile marketing platforms (e.g. CleverTap, Leanplum) offer RFM as part of their standard offering.

- https://automationclinic.com/how-amazon-uses-recency-to-skyrocket-repeat-purchases/

- https://www.researchgate.net/publication/228399859_A_review_of_the_application_of_RFM_model

- https://www.researchgate.net/publication/332381743_A_Case_Study_of_Fintech_Industry_A_Two-Stage_Clustering_Analysis_for_Customer_Segmentation_in_the_B2B_Setting

- Python code examples for RFM at www(dot)GitHub(dot)com